Introduction

Standard cameras (such as DSLRs and phone cameras) and our scientific cameras use the same basic process to turn light into an image, based on photodetectors and sensor pixels.

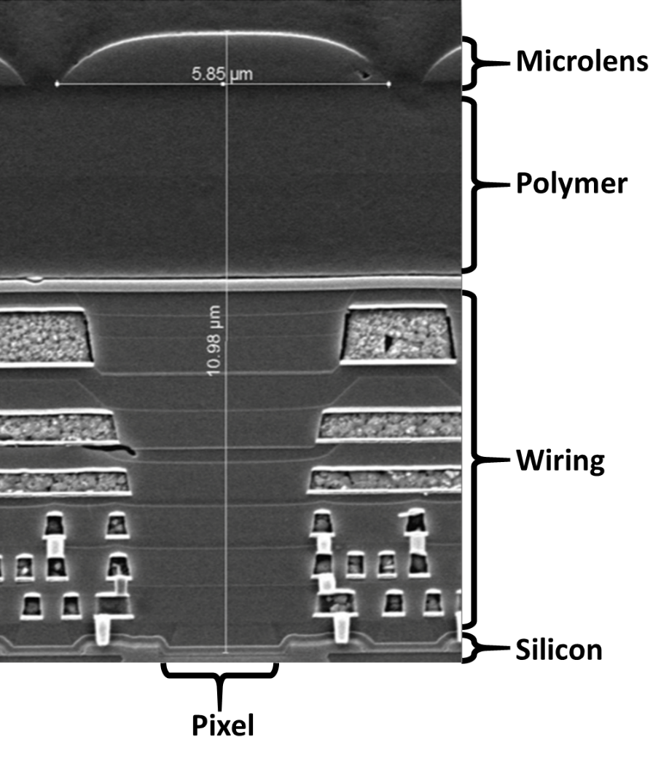

A scientific camera sensor needs to be quantitative: it must detect and count photons (the basic units of light), convert them into an electrical signal, and then convert that signal into a digital one. The first of these steps is detecting photons. Scientific cameras use photodetectors made of silicon, in which photons that hit the photodetector are converted into a corresponding number of electrons.
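The steps above can be sketched as a tiny photon-counting pipeline: photons become electrons, and electrons become digital grey levels. This is a minimal illustration, not any camera's actual processing. The quantum efficiency (the fraction of incident photons that release an electron), the gain in electrons per grey level and the bit depth are hypothetical parameters chosen for the example; a quantum efficiency of 1 corresponds to the idealized "one photon, one electron" case.

```python
def photons_to_electrons(photons: int, quantum_efficiency: float = 1.0) -> float:
    """Expected number of electrons released by a given number of photons.

    quantum_efficiency is an assumed parameter: 1.0 reproduces the idealized
    one-photon-one-electron case; real sensors detect only a fraction of photons.
    """
    return photons * quantum_efficiency


def electrons_to_grey_level(electrons: float, gain_e_per_adu: float = 2.0,
                            bit_depth: int = 12) -> int:
    """Digitize a charge (in electrons) into a grey level.

    gain_e_per_adu (hypothetical) is how many electrons make up one grey level;
    the result is clipped to the largest value a bit_depth-bit converter can output.
    """
    grey = int(electrons // gain_e_per_adu)
    max_grey = 2 ** bit_depth - 1
    return min(grey, max_grey)


# Example: 1000 photons hit a pixel with an assumed quantum efficiency of 0.75.
electrons = photons_to_electrons(1000, quantum_efficiency=0.75)
grey = electrons_to_grey_level(electrons, gain_e_per_adu=2.0)
print(electrons, grey)  # 750.0 375
```

Keeping the two conversions as separate functions mirrors the separate physical steps: detection in the silicon, then digitization in the camera electronics.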

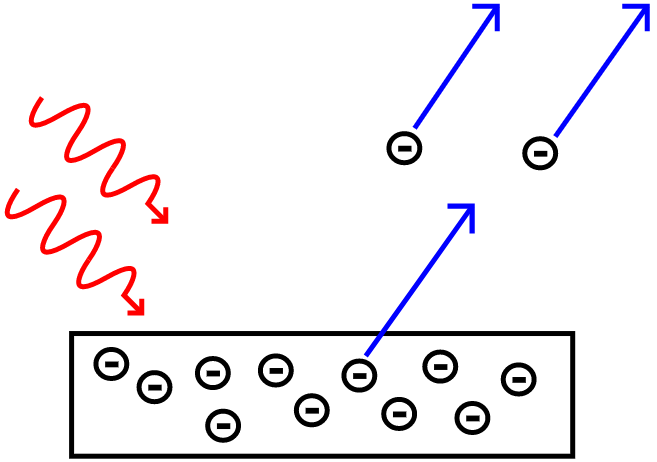

Photoelectric Effect

The production of electrons through an interaction with light is known as the photoelectric effect, as shown in Fig.1. It occurs when electrons within a material absorb energy from photons and gain enough energy to break free of their bonds. In the classic photoelectric effect the freed electrons are ejected from the material; in a camera sensor they remain inside the material, where they can be collected. The materials used in camera sensors are semiconductors, most commonly silicon, or gallium-based compounds such as indium gallium arsenide (InGaAs).
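Whether a single photon can free an electron depends on the photon's energy, E = hc/λ, compared with the energy needed to break the electron's bond. A minimal sketch, assuming silicon's band gap of about 1.12 eV at room temperature as that threshold, shows why silicon stops responding at wavelengths beyond roughly 1100 nm. The function names are illustrative only.

```python
# h*c expressed in electron-volt nanometres (approximately 1239.84 eV nm).
HC_EV_NM = 1239.84
# Approximate band gap of silicon at room temperature, in eV (assumed threshold).
SILICON_BAND_GAP_EV = 1.12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon of the given wavelength, E = hc / lambda."""
    return HC_EV_NM / wavelength_nm


def can_free_electron(wavelength_nm: float,
                      band_gap_ev: float = SILICON_BAND_GAP_EV) -> bool:
    """True if one photon carries at least the band-gap energy."""
    return photon_energy_ev(wavelength_nm) >= band_gap_ev


# Blue, green, near-infrared, and beyond silicon's cut-off (about 1107 nm).
for wl in (450, 550, 1000, 1200):
    print(wl, "nm:", round(photon_energy_ev(wl), 2), "eV,", can_free_electron(wl))
```

Longer wavelengths carry less energy per photon, so below the band gap no electrons are freed regardless of how many photons arrive.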

Camera sensors typically consist of a very thin layer of silicon; when photons hit the silicon, they produce a corresponding number of electrons. Because electrons carry an electric charge, the sensor effectively converts light into an electrical signal: the collected charge is read out as a voltage, which is then digitized so that a computer can turn it into an image.
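The link between collected electrons and a voltage follows from V = Q / C, where Q is the total charge (number of electrons times the elementary charge) and C is the capacitance of the node on which the charge is measured. A minimal sketch; the 5 fF capacitance is a hypothetical value picked for illustration, and real sensors differ.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # magnitude of one electron's charge, in coulombs


def electrons_to_voltage(n_electrons: int, capacitance_f: float = 5e-15) -> float:
    """Voltage produced by n_electrons collected on a node of the given capacitance.

    capacitance_f is an assumed value (5 femtofarads) used only for illustration.
    """
    charge = n_electrons * ELEMENTARY_CHARGE_C  # Q = N * e
    return charge / capacitance_f               # V = Q / C


# 10,000 electrons on a 5 fF node give roughly 0.32 V.
print(f"{electrons_to_voltage(10_000):.3f} V")
```

Because the relationship is linear, the voltage stays proportional to the number of photons detected, which is what makes the sensor quantitative.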

Pixels

With just one block of silicon, the sensor cannot determine where the photons came from, only that some photons hit it. By dividing the silicon into a grid of many tiny squares, you can both detect photons and know where they came from (detection and localization). These tiny squares are called pixels, or more precisely sensor pixels, which are not the same as the pixels of a PC monitor or TV. A pixel can be seen in Fig.2.
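The difference between a single block of silicon and a grid of pixels can be sketched by binning photon hit positions into a 2D array of counters. Each hit increments the counter of the pixel it lands in, so the array records both how many photons arrived and where. The hit coordinates, grid size and 6.5 µm pixel size are made up for illustration.

```python
def bin_photons(hits, rows, cols, pixel_size):
    """Count photon hits per pixel on a rows x cols grid.

    hits: iterable of (x, y) positions on the sensor, in the same units as
    pixel_size. Hits that fall outside the sensor are ignored.
    """
    image = [[0] * cols for _ in range(rows)]
    for x, y in hits:
        col = int(x // pixel_size)
        row = int(y // pixel_size)
        if 0 <= row < rows and 0 <= col < cols:
            image[row][col] += 1
    return image


# Hypothetical hits (in micrometres) on a 3 x 3 sensor with 6.5 um pixels.
hits = [(1.0, 1.0), (2.0, 3.0), (14.0, 8.0), (19.0, 19.0), (30.0, 1.0)]
image = bin_photons(hits, rows=3, cols=3, pixel_size=6.5)
total = sum(map(sum, image))  # a single block of silicon would only know this number
for row in image:
    print(row)
```

The total count is the same information a single undivided block would give; the grid adds the position of each detection.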

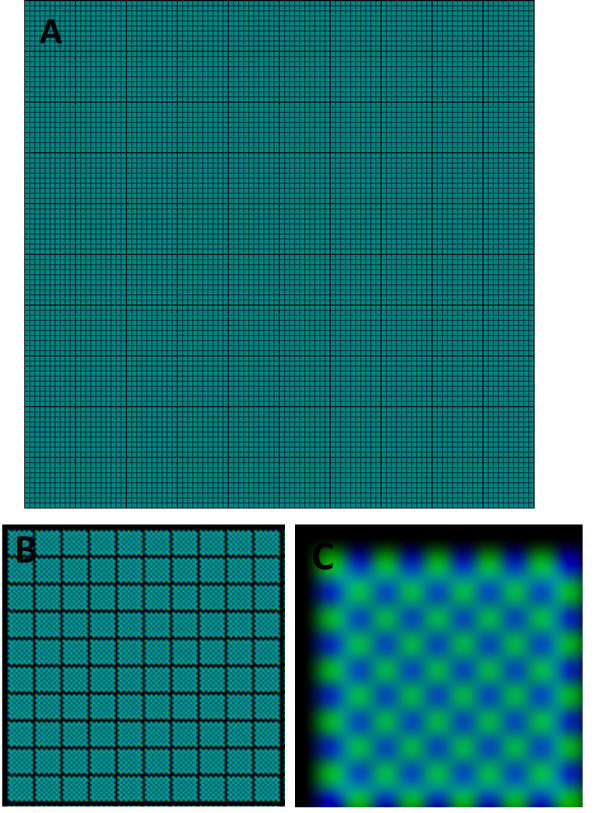

Technology has developed to the point where millions of pixels can fit onto a sensor. When a camera is advertised as having 1 megapixel, this means its sensor contains one million pixels, for example an array of 1000 × 1000 pixels. This concept is explored in Fig.3.
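The megapixel figure is simply the number of pixels across multiplied by the number of pixels down, divided by one million. The 2048 × 2048 array in the second line is a hypothetical example.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count of a width x height sensor, expressed in millions."""
    return width_px * height_px / 1_000_000


print(megapixels(1000, 1000))  # 1.0
print(megapixels(2048, 2048))  # 4.194304
```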

In general, the smaller the pixels on a sensor, the higher the resolution (the ability to distinguish small features). However, if pixels are made very small in order to fit more onto a sensor, each pixel collects less light, so smaller pixels are less sensitive.
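A rough way to see this trade-off: for a fixed sensor width, the number of pixels across grows as 1 / pixel size, while the light one pixel collects scales with its area, the pixel size squared. A minimal sketch, assuming square pixels and uniform illumination; the 13 mm sensor width and the photon flux are hypothetical values, and effects of the optics on resolution are ignored.

```python
def pixels_across(sensor_width_um: float, pixel_size_um: float) -> int:
    """How many whole pixels fit across a sensor of the given width."""
    return int(sensor_width_um // pixel_size_um)


def photons_per_pixel(flux_per_um2: float, pixel_size_um: float) -> float:
    """Photons collected by one square pixel under uniform illumination."""
    return flux_per_um2 * pixel_size_um ** 2


SENSOR_WIDTH_UM = 13_000.0  # hypothetical 13 mm wide sensor
FLUX = 16.0                 # hypothetical photons per square micrometre

for size in (13.0, 6.5, 3.25):
    print(f"{size} um pixels: {pixels_across(SENSOR_WIDTH_UM, size)} across, "
          f"{photons_per_pixel(FLUX, size):.0f} photons each")
```

Halving the pixel size doubles the number of pixels across the sensor but leaves each pixel with only a quarter of the light.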

In addition, if sensors were too large or contained too many pixels, the computational power needed to process their output would increase massively, slowing down image acquisition. Large amounts of storage would also be needed: scientists can take thousands of images in experiments lasting months or years, so an oversized sensor would quickly fill the available storage. For these reasons, camera sensors are carefully designed to have the optimal overall size, pixel size and number of pixels.
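The storage concern is easy to quantify. A minimal sketch, assuming uncompressed images stored at 16 bits (2 bytes) per pixel and ignoring file headers; the sensor sizes and the number of images are hypothetical.

```python
def image_size_bytes(width_px: int, height_px: int, bits_per_pixel: int = 16) -> int:
    """Uncompressed size of one image in bytes (file headers ignored)."""
    return width_px * height_px * bits_per_pixel // 8


def dataset_size_gb(n_images: int, width_px: int, height_px: int,
                    bits_per_pixel: int = 16) -> float:
    """Total size of n_images uncompressed images, in gigabytes (10**9 bytes)."""
    return n_images * image_size_bytes(width_px, height_px, bits_per_pixel) / 1e9


# 10,000 images from a 4-megapixel sensor vs. a 25-megapixel sensor.
print(dataset_size_gb(10_000, 2000, 2000))  # 80.0
print(dataset_size_gb(10_000, 5000, 5000))  # 500.0
```

Storage grows linearly with both the pixel count and the number of images, so a sensor with six times as many pixels fills a disk six times faster.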

Summary

Camera sensors are typically made of silicon divided into a grid of millions of squares; these light-sensitive squares are the pixels. When light hits a pixel, the material absorbs energy from the photons and releases electrons. These electrons are stored, amplified and then converted into grey levels by the computer software, producing an image.